Pioneering research and innovative solutions in spatial audio and acoustic signal processing

Reality AI Lab is at the forefront of audio research and development, pushing the boundaries of what's possible in sound technology. Our interdisciplinary team of expert engineers, scientists, and researchers is dedicated to creating immersive sound experiences that blur the line between the virtual and the real.

With state-of-the-art facilities and a commitment to innovation, we're driving advancements in spatial audio, acoustic modeling, and psychoacoustic research. Our work ranges from fundamental research to practical applications, shaping the future of audio technology.

Cutting-edge algorithms for spatial audio processing and real-time sound manipulation, leveraging machine learning and AI technologies.

Our lab is equipped with advanced technology for acoustic modeling, psychoacoustic testing, and immersive audio experiences, including a Cave Automatic Virtual Environment (CAVE).

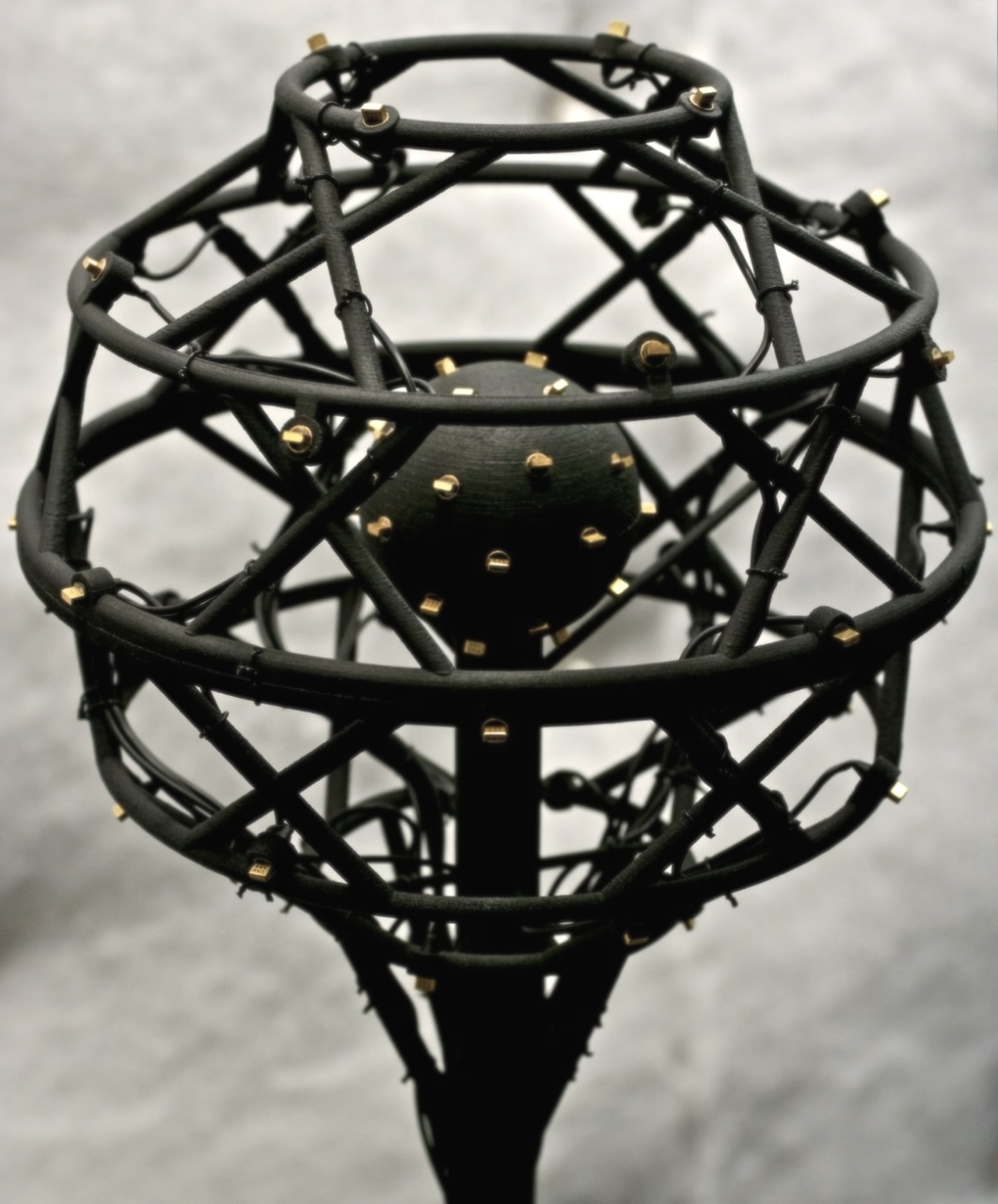

Ultra-wideband technology and custom spherical microphone arrays for accurate indoor positioning and spatial audio alignment.

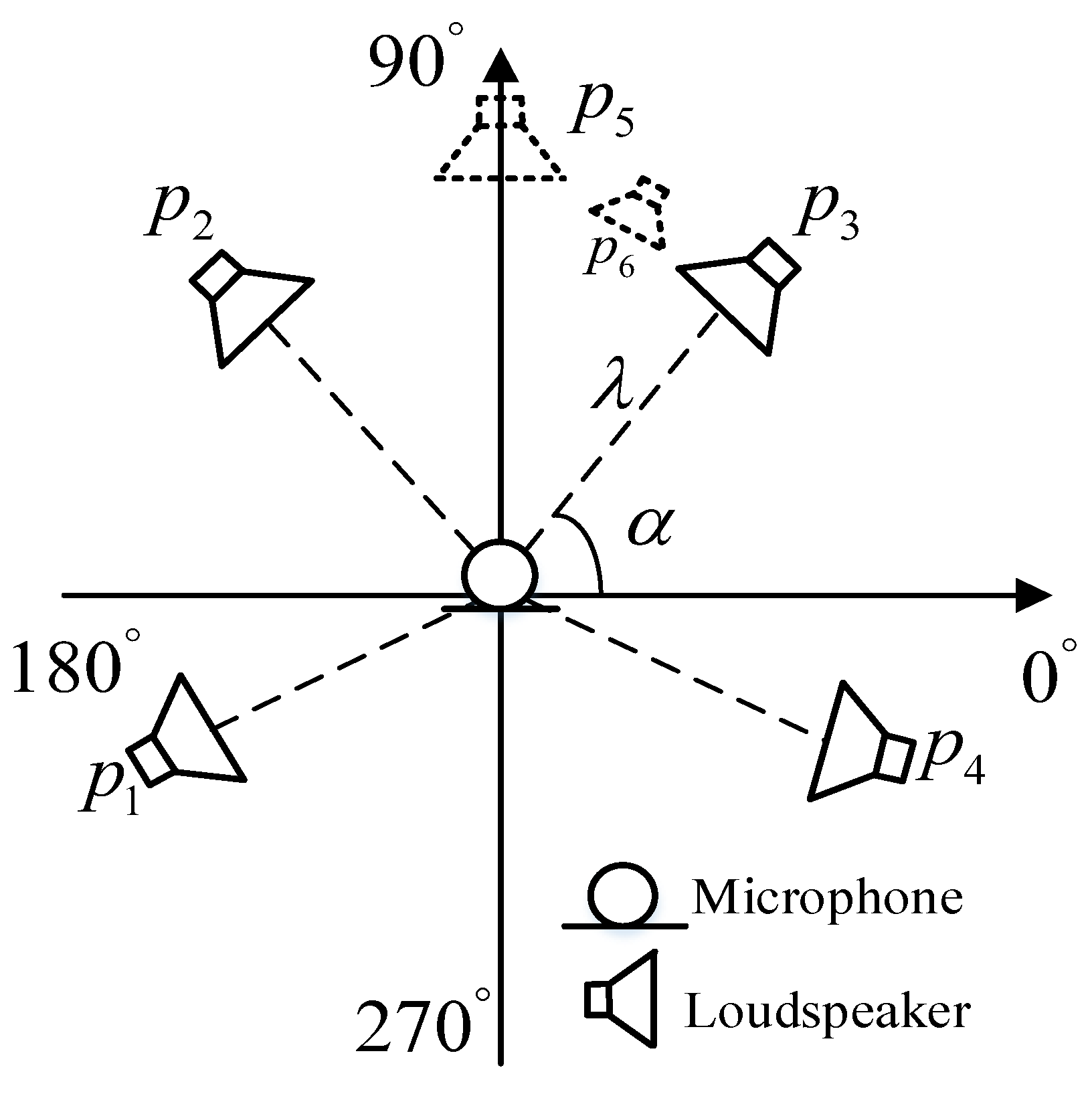

Investigating human spatial and directional hearing in various acoustic settings using our custom-built hemispherical speaker array with 91 individually controlled channels. Our team is currently exploring the impact of room reflections on sound source localization accuracy and developing novel algorithms to compensate for these effects in real-time rendering systems.

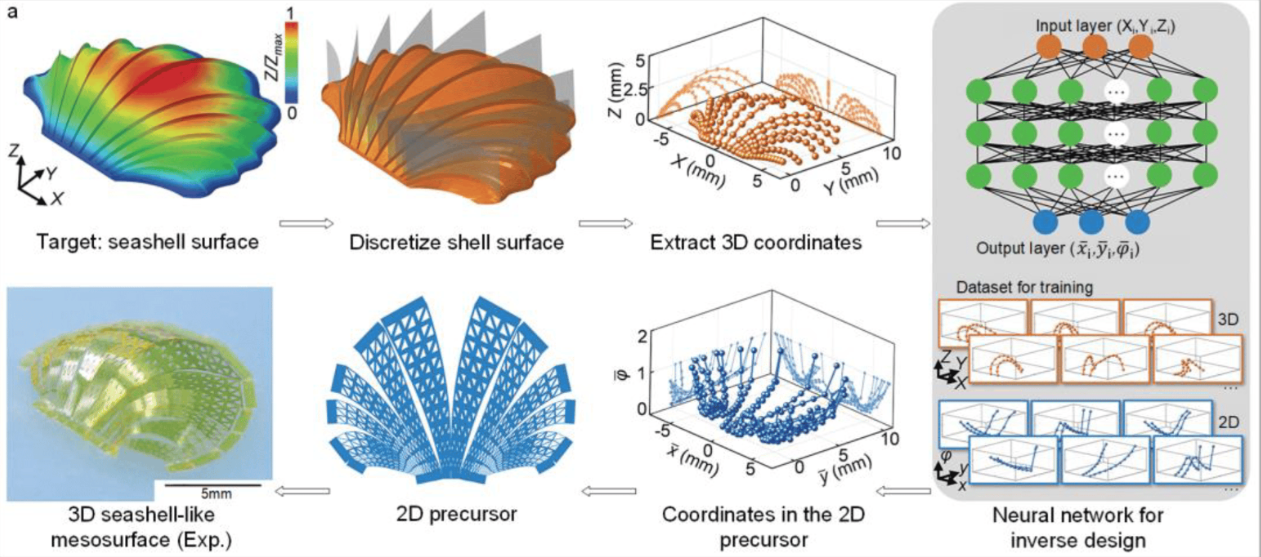

Developing machine learning models for real-time acoustic scene understanding and sound source separation in challenging environments. Our latest research focuses on multi-modal deep learning architectures that combine audio and visual cues for robust source localization and tracking in dynamic environments. We're also investigating the application of self-supervised learning techniques to improve generalization across diverse acoustic conditions.

Our team is developing advanced adaptive filtering algorithms for acoustic echo cancellation in complex multi-channel audio systems. We're investigating the use of frequency-domain adaptive filters combined with non-linear processing to handle non-stationary echo paths and near-end signal distortions. Our research aims to improve the quality of full-duplex audio communication in immersive environments, with a focus on minimizing processing latency and computational complexity.
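As a rough illustration of the adaptive-filtering idea behind echo cancellation, the sketch below uses a plain time-domain normalized LMS (NLMS) filter. This is a simpler stand-in, not the frequency-domain, multi-channel approach described above, and it omits non-linear processing and double-talk handling. The function name `nlms_echo_cancel`, its parameters, and the 3-tap synthetic echo path are all assumptions made for the example.

```python
import math
import random


def nlms_echo_cancel(far_end, mic, num_taps=8, step=0.5, eps=1e-8):
    """Subtract an adaptively estimated echo of far_end from mic.

    Returns the residual signal and the final filter weights.
    """
    weights = [0.0] * num_taps   # current estimate of the echo path
    history = [0.0] * num_taps   # most recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        echo_estimate = sum(w * h for w, h in zip(weights, history))
        e = d - echo_estimate    # what remains after removing the estimate
        # Normalizing by input energy keeps the update stable across levels.
        norm = sum(h * h for h in history) + eps
        weights = [w + step * e * h / norm for w, h in zip(weights, history)]
        residual.append(e)
    return residual, weights


def power(signal):
    return sum(s * s for s in signal) / len(signal)


# Synthetic check: a hypothetical 3-tap echo path and no near-end talker.
random.seed(0)
true_path = [0.6, -0.3, 0.1]
far_end = [random.uniform(-1.0, 1.0) for _ in range(4000)]
mic = [
    sum(true_path[k] * far_end[n - k] for k in range(len(true_path)) if n - k >= 0)
    for n in range(len(far_end))
]

residual, weights = nlms_echo_cancel(far_end, mic)

# Echo return loss enhancement (ERLE) over the tail, after convergence.
erle_db = 10 * math.log10(power(mic[-500:]) / (power(residual[-500:]) + 1e-20))
print(f"ERLE over last 500 samples: {erle_db:.1f} dB")
```

In this idealized, noise-free setup the first three weights should settle close to the assumed echo path. Real rooms have much longer, time-varying echo paths, which is one reason frequency-domain block processing is attractive when latency and computational cost matter.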

Reality AI Lab fosters partnerships with leading academic institutions, industry players, and research organizations to drive innovation in audio technology. Our collaborative approach ensures that our research remains at the cutting edge of the field.